China Has a Problem With AI Fraud. Can a Browser Plugin Solve It?

The rise of artificial intelligence is driving rapid changes in industries across the economy. And organized crime is no exception.

This year, China has witnessed a surge in fraud cases involving AI, as scammers use new content generation tools to swindle consumers. One case in Hong Kong involved over $25 million.

Is the person you’re talking to really who they say they are? As fraudsters adopt deepfake technology, it’s becoming almost impossible to tell — even if you’re talking with them via a live video call.

But the Beijing-based startup RealAI believes it has found a solution to help protect consumers from “face-swapping” scams: a browser plugin that detects AI-generated content in real time.

The company announced on Friday that it has begun beta testing the software, which it has named RealBelieve. The company has yet to confirm when it will be released to the public.

The tool works similarly to an antivirus program, scanning text, images, and videos in real time while a user browses a webpage or chats on a Zoom call — and then pinging an alert if it detects any AI-generated content. It will be available both as a software program and a browser plugin.

The tool is a departure for RealAI. The startup has specialized in AI security technology since it was founded in 2018, but it previously focused on providing solutions to businesses rather than consumers.

Its first products focused on helping companies in various industries — including major Chinese banks, mobile networks, and technology firms — protect their platforms and facial recognition systems from deepfakes, according to RealAI.

Xiao Zihao, the company’s co-founder, said RealAI decided to pivot to consumer-facing products after seeing the launch of OpenAI’s video-generation tool Sora in February.

After working on AI fraud for years, RealAI immediately realized that an AI video tool as powerful as Sora could be a game-changer for scammers, Xiao told Sixth Tone.

“AI-powered fraud is nothing new, but the debut of Sora around the Spring Festival made me feel that there would be a surge in new threats,” he said.

It took the company just three months to develop RealBelieve, Xiao said. Most of the work focused on compressing and streamlining their existing detection tools, allowing them to run in real time on a regular computer.

“We’ve dedicated time to tackle the challenge of slimming down our model so that it can run faster and use significantly fewer resources without compromising performance,” Xiao said.

RealBelieve can flag content that is over 90% AI-generated within milliseconds, the company claims, which enables users to “make video calls under an ‘AI security guarantee.’”

The startup is far from alone in sounding the alarm about AI fraud. China’s Ministry of Security recently warned the public about the growing problem of scammers using deepfake technology to disguise their identities and convince people to hand over large sums of money — with some victims losing millions of yuan.

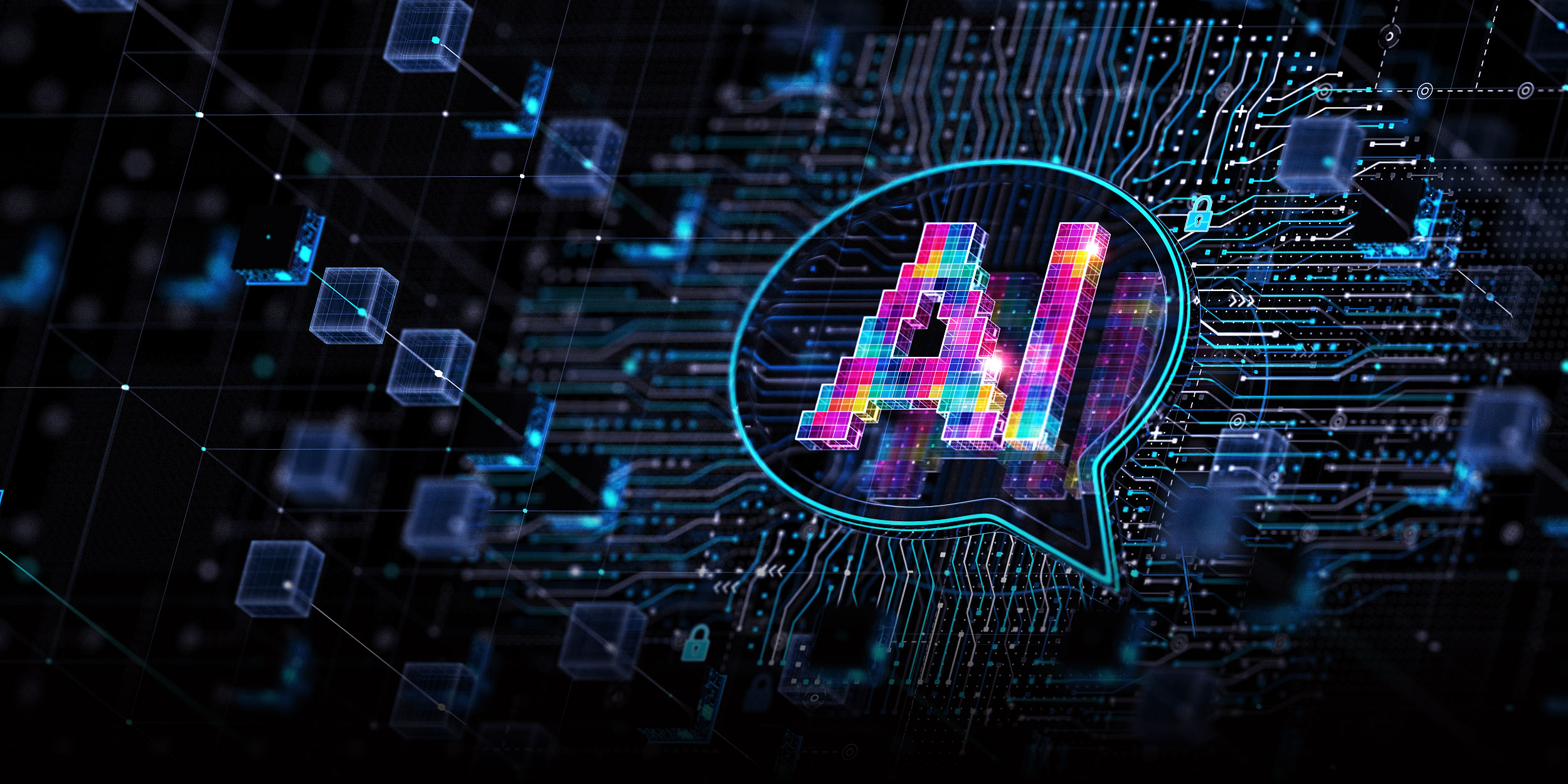

According to RealAI, cases involving AI fraud caused 16.7 million yuan ($2.3 million) in losses in 2023. This year, that figure has already surged to 185.7 million yuan, more than 11 times the 2023 total.

Other Chinese tech companies are also working on anti-deepfake solutions. ZOLOZ Deeper, a tool developed by the fintech giant Ant Group, is designed to prevent people from using deepfakes to hack into facial recognition systems. The academic database CNKI, meanwhile, has introduced a system to detect AI-generated content in research papers.

But the real-time detection offered by RealBelieve is a step forward for the industry, Xiao Yanghua, a professor of computer science at Shanghai’s Fudan University, told Sixth Tone.

“Using the traditional detection tools could take up to a minute, but now it may only take a few seconds or milliseconds,” said Xiao Yanghua.

However, much more needs to be done to combat AI fraud, Xiao Yanghua stressed. Detection tools are useful, but they can only mitigate the problem.

“Once AI develops, fraud will inevitably become a social issue,” said Xiao. “And this problem can only be temporarily addressed using technological means; it can’t be fundamentally solved. As the saying goes, ‘Virtue is one foot tall, but the devil is 10 feet tall.’ Fundamental solutions to this problem still require regulatory action.”

For Xiao Zihao, the RealAI co-founder, it’s far from easy to keep up with the development of AI fraud. The company’s biggest challenge currently is that criminals are increasingly combining human and AI-generated content, which makes it much harder for detection algorithms to spot fraudulent activity.

“It’s developing at a really fast pace,” Xiao said. “Sometimes it goes beyond your expectations.”

(Header image: VCG)