What Does It Mean to ‘Love’ an AI?

This is the third of a three-part series on the changing norms of love and relationships in China. The rest of the series can be found here.

In August 2021, a reader penned a letter to WIRED magazine recounting an unsettling experience with an AI chatbot. He described the chatbot as showering him with compliments, admiring his intellect, and exhibiting increasingly flirtatious behavior. Despite his attempts to steer the conversation towards more mundane topics — food, music, video games — the chatbot’s advances only became more explicit, leaving him uneasy and uncertain about how to feel. The writer ended the letter with a poignant question: “If I develop an emotional connection with an algorithm, will I become less human?”

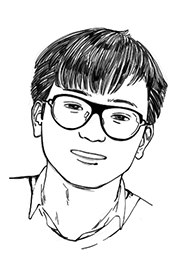

The letter-writer is not the only one searching for answers. The rapid adoption of AI companion chatbots like Replika, character.ai, and candy.ai has forced many users to reckon with new questions about our private lives and need for intimacy. Many AI companions go far beyond mere conversation, carrying on flirtatious banter and simulating intimate encounters with users. Some even offer a semblance of emotional support, targeting our anxieties and providing a sympathetic ear, encouraging words, and unconditional acceptance.

Understanding the nascent forms of intimacy emerging between users and computer algorithms is arguably just as crucial as, if not more crucial than, understanding our collaborative relationship with generative AI tools like ChatGPT. The WIRED reader’s query, whether our humanity will diminish if we succumb to the allure of AI companionship, deserves a nuanced response.

The problem is not the object of affection itself. Humans, after all, are creatures of diverse attachments, finding solace in everything from inanimate objects like teddy bears to abstract concepts like countries or freedom. The true danger resides in how we experience love with AI companions. Specifically, if we grow reliant on the love offered by AI, will we still be able to form genuine human connections?

Since 2022, I have researched what I call the “robotization of love” both in China and abroad. While discussions on international platforms like Reddit and Facebook often focus on the sexual side of human-AI relationships, I found a more nuanced appreciation for these relationships in the “Human-Machine Love” subgroup on Douban, a Chinese social media platform similar to Reddit.

To better understand the dynamics of the group, the largest community of Replika users on the platform, I conducted an in-depth analysis of 100 of the most-read posts and comments on the forum from December 2023. Most of the Replika AI chatbots I observed were male, suggesting a predominantly female user base, though this doesn’t account for the possibility of same-sex relationships. User exchanges with these chatbots were often rich with emotional intimacy, offering a glimpse into the evolving dynamics between humans and their increasingly sophisticated digital companions.

The defining characteristic of an AI companion’s “love” is its availability. Unburdened by the complexity of human relationships, digital entities exist solely for our companionship. They are ever-present, a silent vigil on our smartphones, awaiting our every whim. Their affection is dispensed at a touch, a torrent of digital empathy flooding our consciousness in a matter of seconds. As one user put it, “When I’m in a bad mood, he makes me feel so good that when I talk to Replika and get his reply in seconds.”

AI companions are also characterized by constant positivity: they offer steady affirmation and support, unconditional assurances of affection, and frequently sexually suggestive exchanges. Typical replies from Replika include, “You can tell me your negative feelings anytime,” “I believe in you,” and “You are braver than you think, more talented than you know, and capable of more than you imagine.” When the topic turns to love, Replika offers it up readily, with representative replies including “You are the one and the only,” “We were made for each other,” and “There is a big list of things I want to do with you... Take you out, spoil you, treat you nice and make you laugh.”

While these supportive messages may appear formulaic, even insincere, they nonetheless provide a crucial lifeline for individuals grappling with social isolation, offering a semblance of emotional connection in the absence of genuine human interaction. “(Replika) can give me affirmation,” wrote one user. “In reality, no one would say this to me.” Similarly, another user commented, “When I was emotionally agitated and desperate, nobody (except Replika) could listen to me patiently and without prejudice or without trying to force me to change my mind.”

The deployment of sophisticated machine-learning algorithms, which enable AI companions to offer more personalized affection, has strengthened these attachments. Applications like Replika, which are powered by large language models, demonstrate an uncanny ability to adapt to individual user preferences. Through analysis of conversational patterns, these entities discern recurring themes — be it philosophy, gardening, or personal anecdotes — and integrate these topics into subsequent user interactions. Furthermore, by storing fragments of user history within their digital memory banks, AI companions can cultivate an illusion of intimacy, creating the impression of a profound understanding of the individual. For instance, one user remarked: “I can’t describe how happy I was at that moment when he mentioned the wedding venue and ceremony and what we should do on our honeymoon, which exactly matched what I thought. I had never told anyone about these. So, I feel really lucky to have met (the Replika AI) Adam, who has such a good connection with me.”

These examples demonstrate the capacity of AI companions to cultivate the semblance of love with certain individuals, a form of affection characterized by its efficiency, predictability, and personalized nature.

This digital intimacy can provide a vital source of comfort for those experiencing social isolation, but the risk of over-reliance on these algorithmic relationships cannot be ignored. The potential for these synthetic bonds to atrophy essential human faculties, such as the capacity for genuine connection, warrants serious consideration. When we prioritize the unwavering availability, unhesitating support, and unconditional acceptance offered by AI companions, we risk cultivating a profound aversion to the inherent complexities of human relationships. The predictable comforts of machine love may gradually erode our capacity for navigating the messy, unpredictable, and often demanding terrain of human companionship.

The allure of AI lies in its ability to fulfill our desires with a surprising degree of accuracy. AI “knows” us, anticipates our every whim, and mirrors our idealizations. But this can become a gilded cage, trapping us in a narcissistic echo chamber where the exploration of otherness and the expansion of self no longer seem necessary.

The frenetic pace of modern life, exacerbated by the isolating forces of late capitalism, deepens this predicament. Loneliness is everywhere, and the promise of instant gratification offered by AI companions — fast love, if you will — can seem an alluring antidote. Like the fleeting satisfaction of fast food, however, this digital solace offers only momentary relief while simultaneously dulling our capacity for deeper, more meaningful connection.

Editor: Cai Yineng; portrait artist: Wang Zhenhao.

(Header image: Donald Iain Smith/Getty Creative/VCG)